Apache Kafka: 101

This post is part of a series that introduces core concepts from the ground up for readers with limited prior knowledge. It belongs to the intermediate-level set, since it covers the primitives of Apache Kafka and its role as the backbone of asynchronous distributed architectures.

SideCar and ServiceMesh 101

Data Encryption 101

Database Replication 101

Caching Strategy 101

Kubernetes Deployment 101

Async Communication 101

HTTPS 101

What is Apache Kafka?

Kafka is a distributed event streaming platform for publishing, storing and processing streams of records in real time. It is commonly used as a messaging layer that decouples services, so that microservice-based applications can communicate asynchronously with low latency under high traffic instead of relying on the synchronous calls typical of monolithic architectures.

It is generally deployed as a cluster of one or more nodes, which can span availability zones and regions, and it offers configurable message delivery guarantees.

Basic Architecture

Producers

Producers are applications that generate messages or data streams. They connect to a Kafka cluster through a list of bootstrap broker addresses; current clients do not connect through ZooKeeper.

Consumers

Consumers are applications that read messages from the cluster, either one at a time or in batches.

Topic

Topics are logical categories for storing messages in a Kafka cluster. Kafka retains messages as records in an append-only log. An offset identifies each record's position within a partition: producers append to the end of the log, and consumers use offsets to track how far they have read.

Partition

Topics are subdivided into partitions, the unit of parallelism and replication in Kafka. Each partition is an ordered log that is replicated across multiple brokers for fault tolerance.
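To make the relationship between topics, partitions and offsets concrete, here is a minimal in-memory sketch. It is not a Kafka client: the `Topic` and `Partition` classes and the topic name `orders` are hypothetical, and `zlib.crc32` is only a stand-in for the hash that real Kafka clients use to map a key to a partition.

```python
import zlib
from dataclasses import dataclass, field


@dataclass
class Partition:
    """An append-only log; a record's offset is its index in the log."""
    records: list = field(default_factory=list)

    def append(self, value: str) -> int:
        self.records.append(value)
        return len(self.records) - 1  # offset of the record just written

    def read(self, offset: int, max_records: int = 10) -> list:
        # Consumers read forward from an offset they track themselves.
        return self.records[offset:offset + max_records]


class Topic:
    """A named group of partitions (toy model, assumption for illustration)."""

    def __init__(self, name: str, num_partitions: int):
        self.name = name
        self.partitions = [Partition() for _ in range(num_partitions)]

    def partition_for(self, key: str) -> int:
        # Stand-in hash: records with the same key land in the same partition.
        return zlib.crc32(key.encode("utf-8")) % len(self.partitions)

    def send(self, key: str, value: str) -> tuple:
        p = self.partition_for(key)
        return p, self.partitions[p].append(value)


topic = Topic("orders", num_partitions=3)
first = topic.send("customer-42", "order created")
second = topic.send("customer-42", "order paid")
print(first, second)
```

Because both records share a key, they go to the same partition and receive consecutive offsets, which is what preserves per-key ordering.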

Broker

A Kafka cluster consists of one or more servers, known as brokers; running several of them provides high availability. Brokers store partition data and replicate it to one another.
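As a rough illustration of how partition replicas can be spread over brokers, the sketch below places them round-robin. This is a simplification, not Kafka's actual assignment algorithm; the function `assign_replicas` and the broker names are hypothetical.

```python
def assign_replicas(num_partitions: int, brokers: list, replication_factor: int) -> dict:
    """Map each partition to the brokers holding its replicas (simplified round-robin)."""
    if replication_factor > len(brokers):
        raise ValueError("replication factor cannot exceed the number of brokers")
    assignment = {}
    for p in range(num_partitions):
        # Start at a different broker for each partition, then take the next ones in order.
        # In this sketch the first broker in each list is treated as the leader.
        assignment[p] = [brokers[(p + i) % len(brokers)] for i in range(replication_factor)]
    return assignment


layout = assign_replicas(3, ["broker-1", "broker-2", "broker-3"], replication_factor=2)
for partition, replicas in layout.items():
    print(partition, replicas)
```

With this layout, losing any single broker still leaves one copy of every partition available.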

ZooKeeper

ZooKeeper handles cluster management tasks such as coordinating the brokers and electing the controller, which in turn chooses the leader replica for each partition. Newer Kafka releases can also run without ZooKeeper by using the built-in KRaft mode.

Writing Strategies

Producers writing messages to the Kafka cluster can choose between strategies that trade resiliency against latency, controlled by the producer's acks setting.

acks=0

The producer does not wait for any acknowledgement from the broker and treats the send as successful immediately. This is the fastest option but the least reliable, since lost messages go undetected.

acks=1

In this strategy, the producer waits for an acknowledgement from the leader replica of the target partition only. This balances latency and durability, but a message can still be lost if the leader fails before the followers have copied it.

acks=all

In this strategy (also written acks=-1), the leader acknowledges the message only after all in-sync replicas have copied it. This is the slowest option but the most durable.
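The trade-off between the three settings can be sketched as a small model of how many replica writes the producer waits for. The helper names `replicas_awaited` and `survives_leader_loss` are hypothetical, and the model assumes the in-sync replica count includes the leader.

```python
def replicas_awaited(acks: str, in_sync_replicas: int) -> int:
    """How many replica writes the producer waits for before treating a send as successful."""
    if acks == "0":
        return 0                  # fire and forget
    if acks == "1":
        return 1                  # leader only
    if acks in ("all", "-1"):
        return in_sync_replicas   # leader plus every in-sync follower
    raise ValueError(f"unsupported acks value: {acks!r}")


def survives_leader_loss(acks: str, in_sync_replicas: int) -> bool:
    """True if a successful send is guaranteed to exist on at least one follower."""
    return replicas_awaited(acks, in_sync_replicas) >= 2


for setting in ("0", "1", "all"):
    print(setting, replicas_awaited(setting, 3), survives_leader_loss(setting, 3))
```

Note that under this model even the strictest setting gives no protection when the leader is the only in-sync replica, which is why the number of in-sync replicas matters as much as the acks value.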

Reading Strategies

Messages published on the topics can be read by the consumers using different read semantics.

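At Most Once

With this delivery semantics, each message is delivered to consumers at most once. A message may be lost, for example when the consumer commits its offset before processing and then crashes, but it is never redelivered.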

Exactly Once

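In this scheme, each published message is processed exactly once, with no loss and no duplicates. It is the strongest guarantee and the hardest to achieve; Kafka supports it through idempotent producers and transactions.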

At Least Once

This fault-resilient scheme guarantees that every message is read at least once by the pool of available consumers; however, the same message can be read multiple times, so processing should tolerate duplicates.
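The difference between at-most-once and at-least-once often comes down to whether the consumer commits its offset before or after processing a message. The simulation below is a sketch of that idea with a single simulated crash; the `consume` function and its parameters are hypothetical and do not use a real Kafka client.

```python
def consume(log: list, commit_first: bool, crash_at: int) -> list:
    """Process `log` with one simulated crash, restarting from the committed offset.

    Returns the messages that were actually processed, in order.
    """
    processed = []
    committed = 0      # offset to resume from after a restart
    crashed = False
    position = 0
    while position < len(log):
        if commit_first:
            committed = position + 1            # commit, then process
            if position == crash_at and not crashed:
                crashed = True
                position = committed            # restart: this message is skipped
                continue
            processed.append(log[position])
        else:
            processed.append(log[position])     # process, then commit
            if position == crash_at and not crashed:
                crashed = True
                position = committed            # restart: this message is re-read
                continue
            committed = position + 1
        position += 1
    return processed


messages = ["a", "b", "c"]
at_most_once = consume(messages, commit_first=True, crash_at=1)
at_least_once = consume(messages, commit_first=False, crash_at=1)
print(at_most_once)
print(at_least_once)
```

Committing first loses the message that was in flight during the crash, while committing afterwards processes it twice. Exactly-once behaviour is commonly approximated by combining the second approach with processing that is safe to repeat.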

Local Setup

Docker Compose File

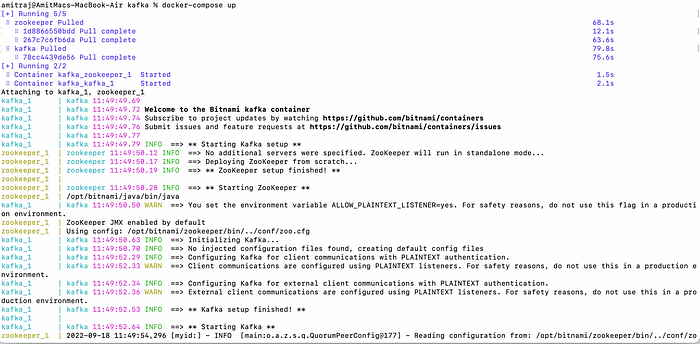

Running Locally

docker-compose up

Summary

This post covers the basics of getting started with Kafka as a messaging system and a local setup of a Kafka cluster. Given the breadth of the topic, other elements such as business use cases, the Kafka Streams API and ksqlDB are left for a later, more advanced post.

For feedback, please drop a message to amit[dot]894[at]gmail[dot]com or reach out to any of the links at https://about.me/amit_raj.